Keras Embedding layer and Programmatic Implementation of GloVe Pre-Trained Embeddings | by Akash Deep | Analytics Vidhya | Medium

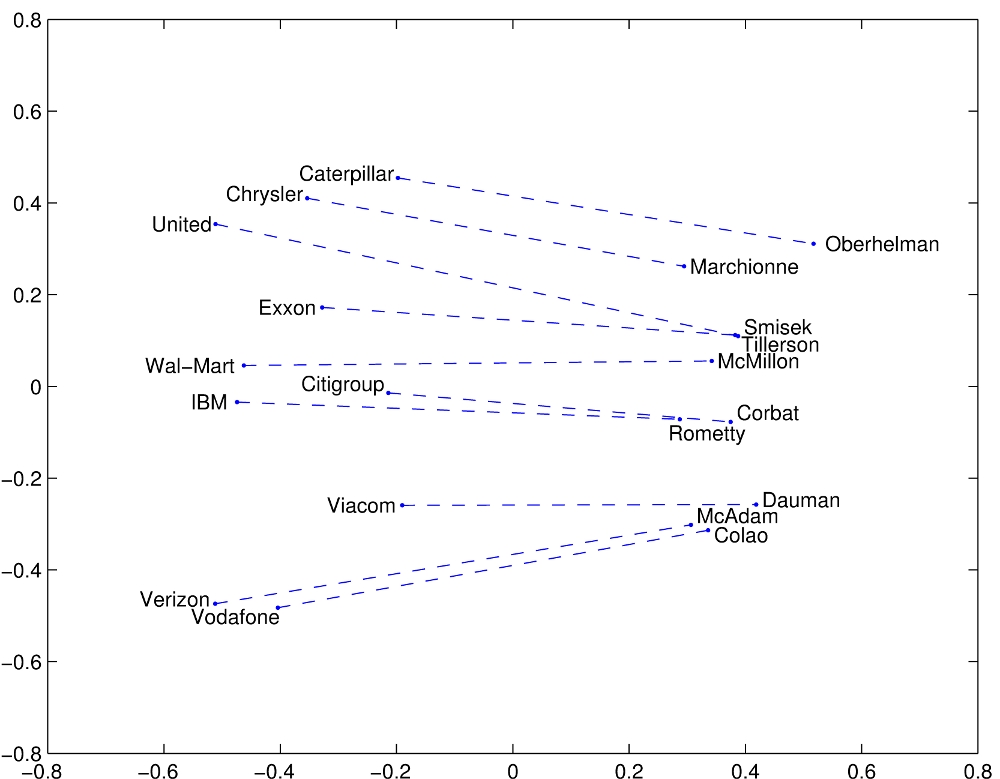

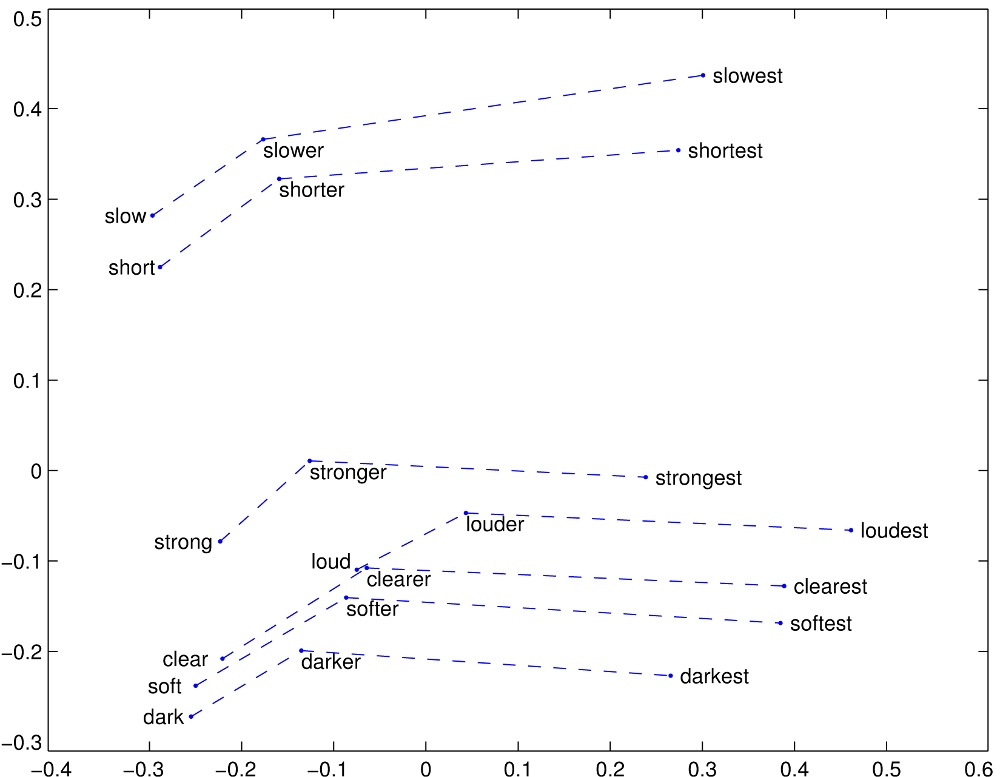

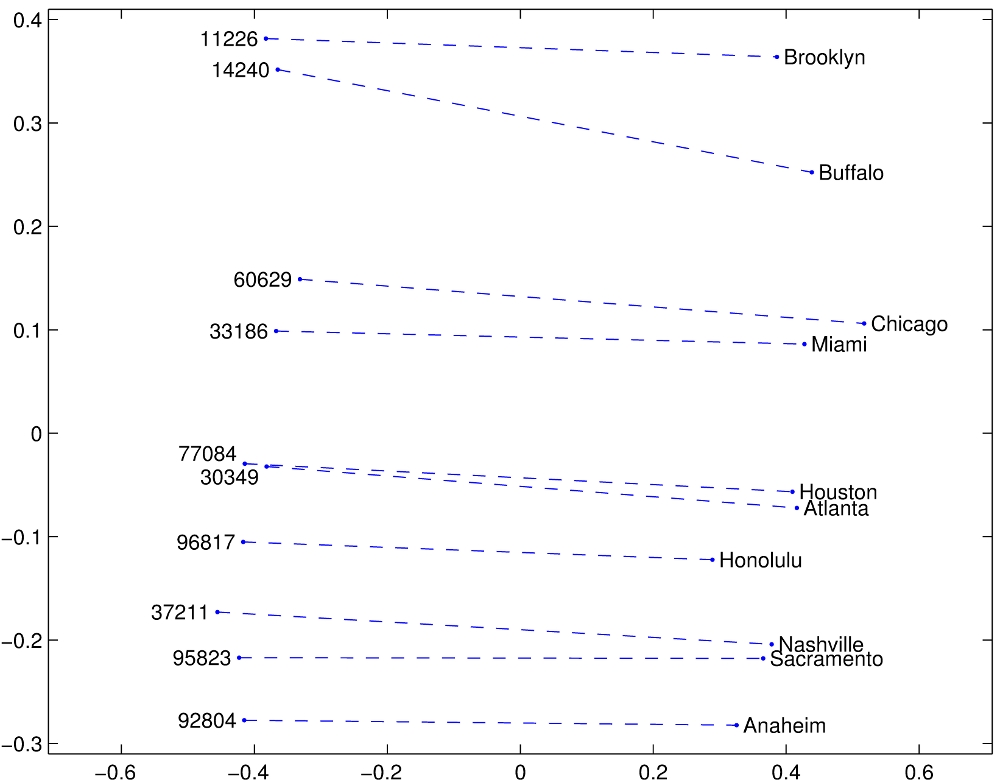

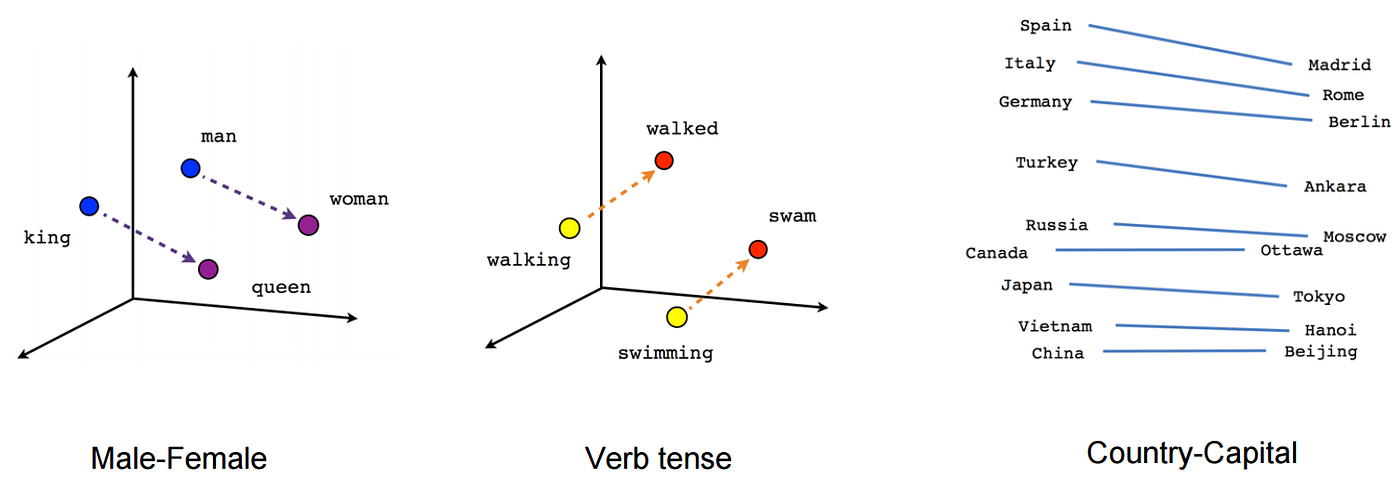

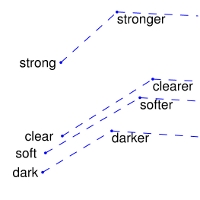

Math with Words – Word Embeddings with MATLAB and Text Analytics Toolbox » Loren on the Art of MATLAB - MATLAB & Simulink

General architecture of bidirectional LSTM that uses pre-trained GloVe... | Download Scientific Diagram

What are the main differences between the word embeddings of ELMo, BERT, Word2vec, and GloVe? - Quora

Implementation of Pre-Trained (GloVe) Word Embeddings on Dataset | by Prachi Gopalani | Artificial Intelligence in Plain English

![Loading Glove Pre-trained Word Embedding Model from Text File in Python [Faster] | by Sarmila Upadhyaya | EKbana](https://cdn-images-1.medium.com/fit/t/1600/480/1*87Rlp-B3h7nUUG26mxU9AA.png)

Loading Glove Pre-trained Word Embedding Model from Text File in Python [Faster] | by Sarmila Upadhyaya | EKbana

CoreML with GloVe Word Embedding and Recursive Neural Network — part 2 | by Jacopo Mangiavacchi | Medium

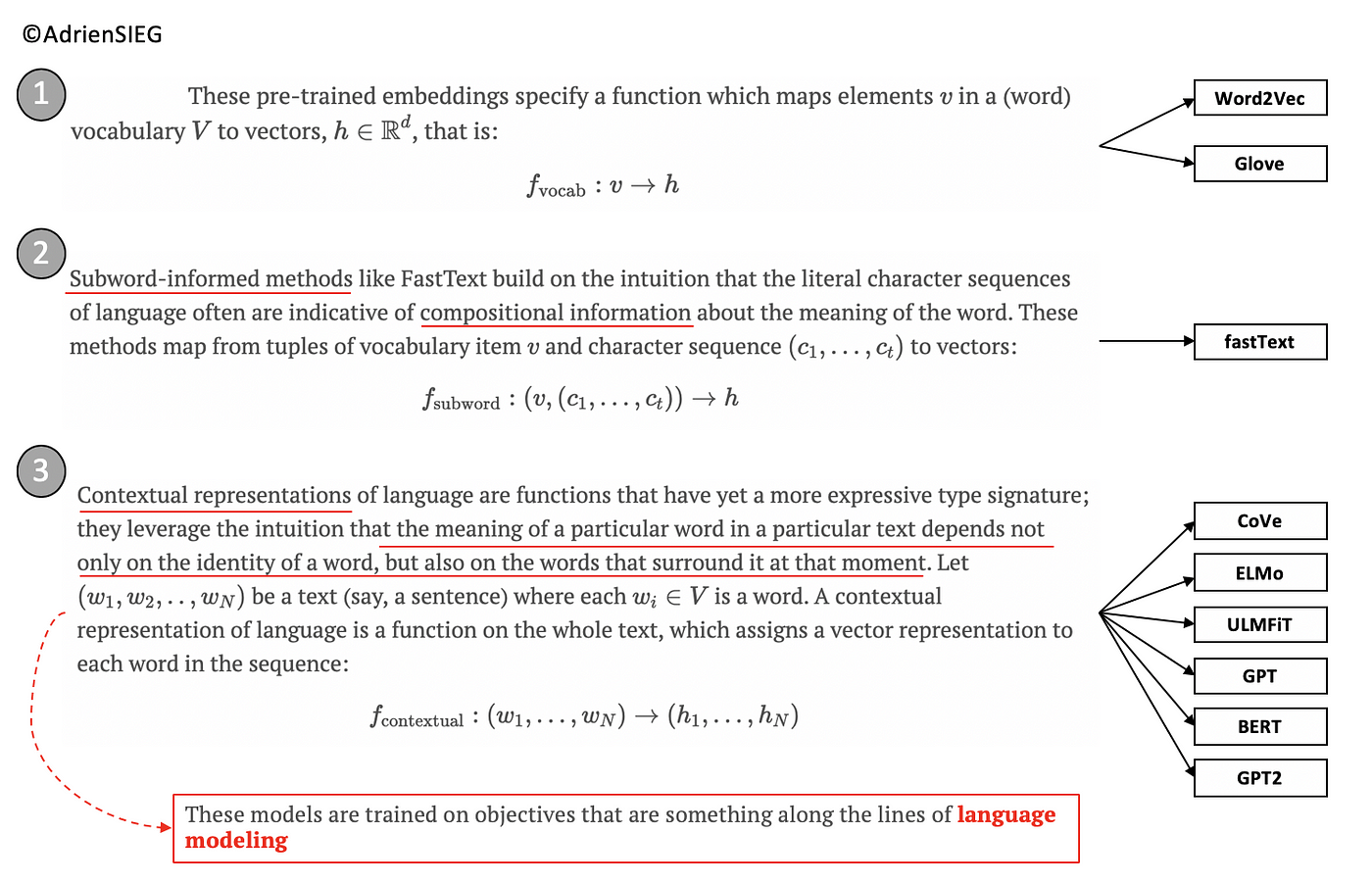

FROM Pre-trained Word Embeddings TO Pre-trained Language Models — Focus on BERT | by Adrien Sieg | Towards Data Science

![\[PDF\] Language Models with Pre-Trained (GloVe) Word Embeddings | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/38438103e787b7b2d112596fd14225872a5403f3/3-Figure1-1.png)
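Several of the articles listed here (the Keras Embedding walkthrough and the EKbana post on loading GloVe from a text file) cover reading the plain-text GloVe release into Python and turning it into a weight matrix for an embedding layer. A minimal sketch follows, assuming the usual `word v1 v2 ... vd` line format with single-space separators (tokens that themselves contain spaces, as in some larger GloVe files, are not handled). The helper names `load_glove` and `build_embedding_matrix` and the sample data are hypothetical and not taken from those articles; the code sticks to the standard library so it runs without NumPy.

```python
import io
from typing import Dict, List, TextIO


def load_glove(stream: TextIO) -> Dict[str, List[float]]:
    """Parse GloVe's plain-text format (assumed): one word per line,
    followed by its vector components separated by single spaces."""
    vectors: Dict[str, List[float]] = {}
    for line in stream:
        parts = line.rstrip().split(" ")
        if len(parts) < 2:
            continue  # skip blank or malformed lines
        word, values = parts[0], parts[1:]
        vectors[word] = [float(v) for v in values]
    return vectors


def build_embedding_matrix(
    word_index: Dict[str, int],
    vectors: Dict[str, List[float]],
    dim: int,
) -> List[List[float]]:
    """Row i holds the vector for the word whose index is i.
    Row 0 is reserved for padding; words missing from GloVe stay all-zero."""
    matrix = [[0.0] * dim for _ in range(len(word_index) + 1)]
    for word, idx in word_index.items():
        vec = vectors.get(word)
        if vec is not None and len(vec) == dim:
            matrix[idx] = list(vec)
    return matrix


# Tiny in-memory stand-in for a file such as glove.6B.50d.txt (hypothetical data).
sample = io.StringIO("the 0.1 0.2 0.3\ncat 0.4 0.5 0.6\n")
glove = load_glove(sample)
word_index = {"the": 1, "cat": 2, "zebra": 3}  # e.g. produced by a tokenizer
matrix = build_embedding_matrix(word_index, glove, dim=3)
print(matrix[2])  # [0.4, 0.5, 0.6]
print(matrix[3])  # [0.0, 0.0, 0.0]
```

In Keras, a matrix like this is typically converted to a NumPy array and passed as initial weights, for example `Embedding(input_dim=len(word_index) + 1, output_dim=dim, embeddings_initializer=keras.initializers.Constant(matrix), trainable=False)`, so the pre-trained vectors stay frozen during training.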